Motivation & Contributions

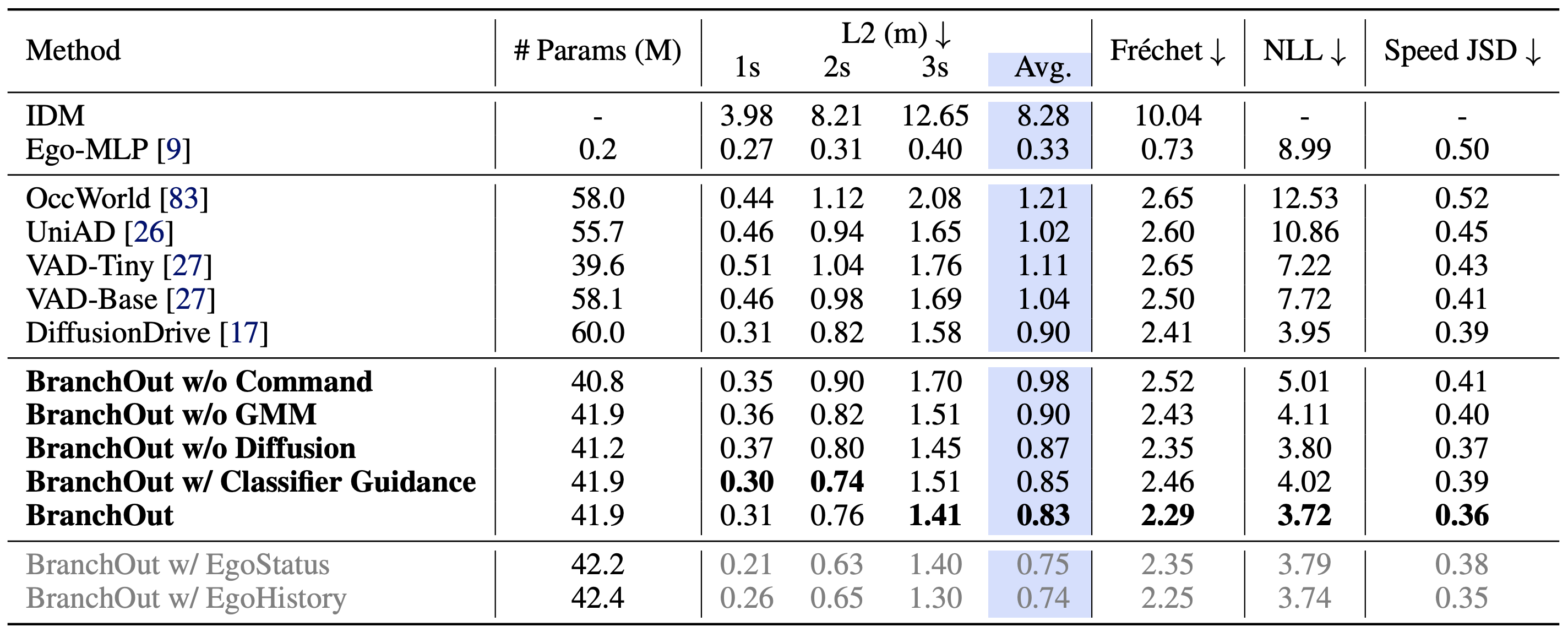

Real-world driving often admits multiple plausible and safe decisions in a given scenario. Yet many current planners are deterministic or suffer from mode collapse, and most datasets provide only a single ground-truth trajectory per scene, which penalizes other feasible alternatives. BranchOut advances both the modeling and the evaluation of realistic multimodality in planning.

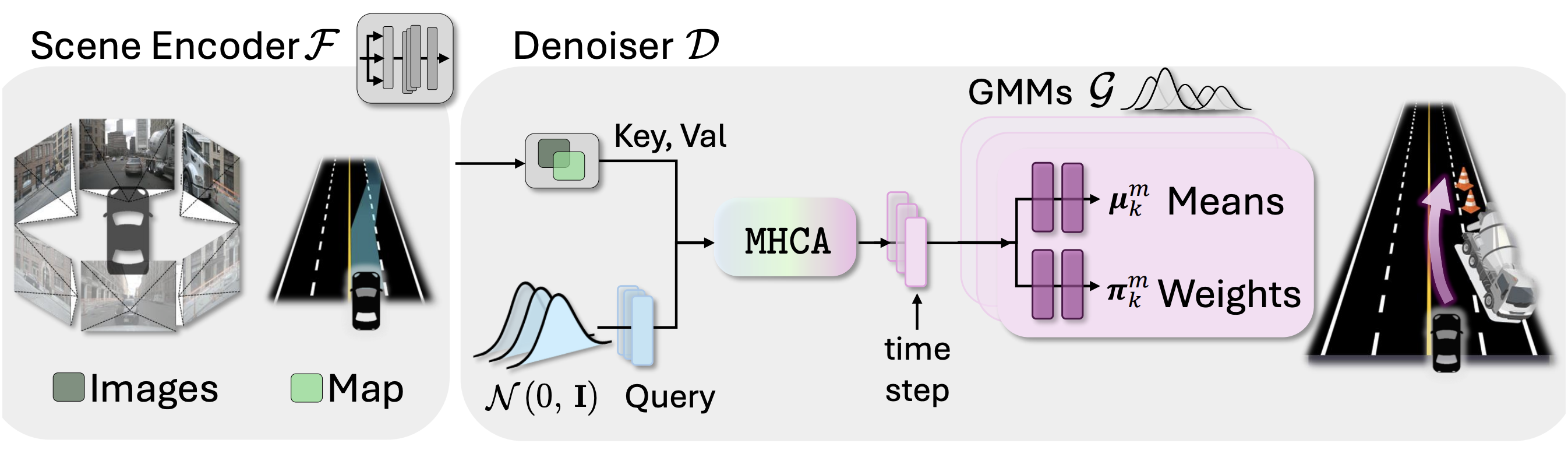

- GMM-based Diffusion Planner. An end-to-end, scene-conditioned diffusion model with a branched Gaussian Mixture Model (GMM) head that outputs multiple trajectory hypotheses, improving multimodal coverage and realism.

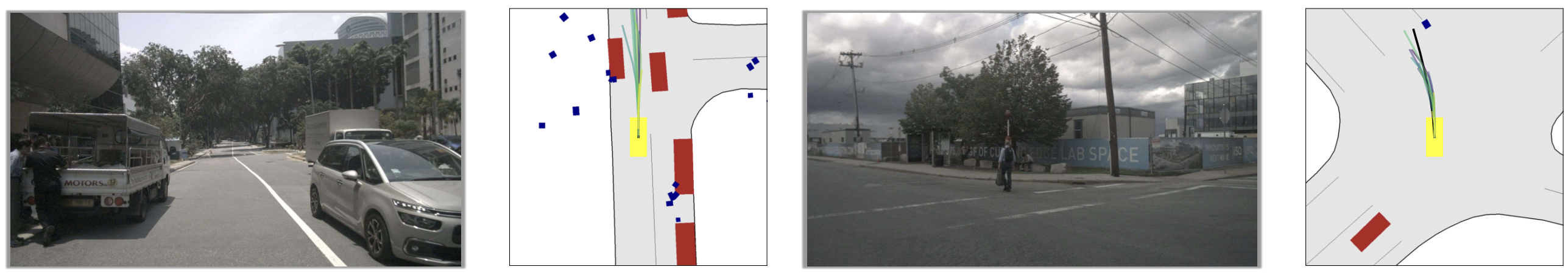

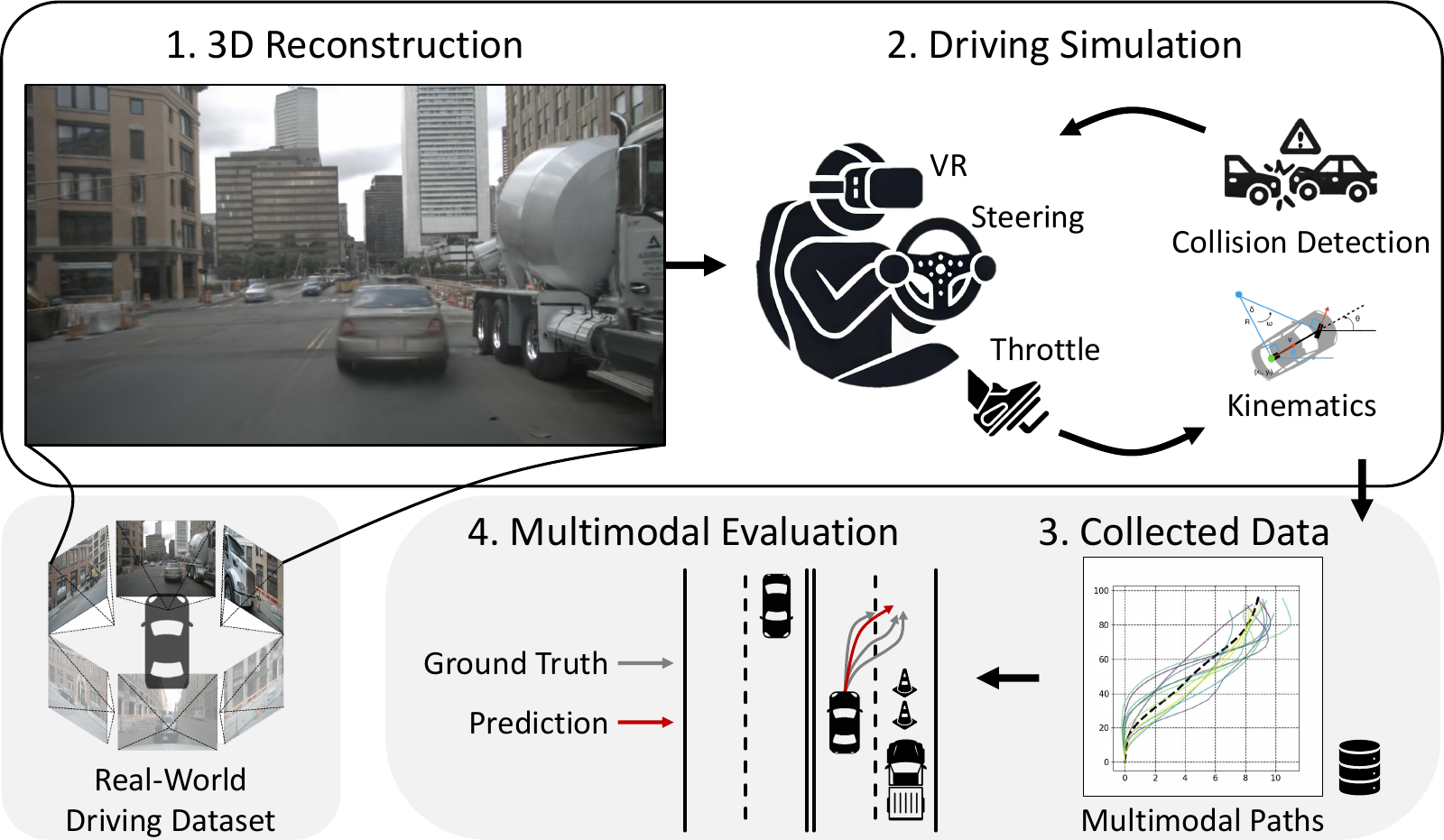

- Human-in-the-Loop Multimodal Benchmark. A photorealistic, closed-loop re-driving framework that collects diverse human trajectories per scene. The framework has been validated against real-world logs and is complemented by a virtual digital-twin setup.

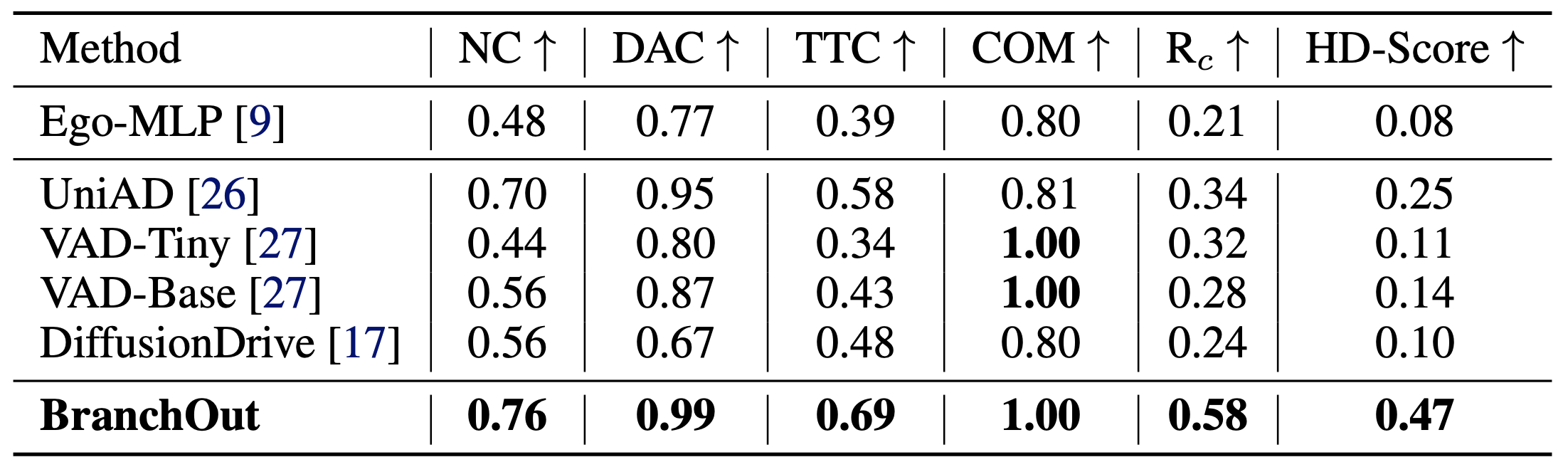

- State-of-the-art Results. Strong open-loop and closed-loop performance and improved distributional alignment (NLL/JSD), achieved with compact sampling (one sample per command).
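The distributional-alignment metrics named above (NLL and JSD) are not defined in this overview. As a rough illustration only, the sketch below assumes, hypothetically, that the planner's outputs are treated as an isotropic Gaussian mixture with one component per command-conditioned sample, and that JSD compares discrete histograms (e.g., counts of maneuver categories) between human and predicted trajectories. The function names `gmm_nll` and `jsd`, the isotropic covariance, and the histogram binning are illustrative assumptions, not BranchOut's actual evaluation protocol.

```python
import math
from typing import List, Sequence


def gmm_nll(traj: Sequence[float], means: List[Sequence[float]],
            sigmas: Sequence[float], weights: Sequence[float]) -> float:
    """Negative log-likelihood of one flattened trajectory under an isotropic GMM.

    Hypothetical setup (assumption, not the paper's definition): each component
    mean is one predicted trajectory flattened as [x0, y0, x1, y1, ...], with its
    own scalar std sigma_k and mixture weight w_k (weights sum to 1).
    """
    d = len(traj)
    log_terms = []
    for mu, sigma, w in zip(means, sigmas, weights):
        sq = sum((a - b) ** 2 for a, b in zip(traj, mu))
        # log N(traj; mu, sigma^2 I) = -||traj - mu||^2 / (2 sigma^2) - d log sigma - (d/2) log(2 pi)
        log_gauss = -0.5 * sq / sigma ** 2 - d * math.log(sigma) - 0.5 * d * math.log(2 * math.pi)
        log_terms.append(math.log(w) + log_gauss)
    # Log-sum-exp over components for numerical stability.
    m = max(log_terms)
    return -(m + math.log(sum(math.exp(t - m) for t in log_terms)))


def jsd(p_counts: Sequence[float], q_counts: Sequence[float]) -> float:
    """Jensen-Shannon divergence (natural log) between two histograms.

    Counts are normalized to probabilities first; the result lies in [0, ln 2].
    """
    p = [c / sum(p_counts) for c in p_counts]
    q = [c / sum(q_counts) for c in q_counts]
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]

    def kl(a: Sequence[float], b: Sequence[float]) -> float:
        # Terms with a_i == 0 contribute 0; b_i > 0 whenever a_i > 0 since b = m.
        return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


if __name__ == "__main__":
    # Hypothetical example: three predicted trajectories (straight, left, right),
    # three 2D waypoints each, and one human trajectory close to "straight".
    means = [[0, 0, 1, 0, 2, 0], [0, 0, 1, 0.5, 2, 1.0], [0, 0, 1, -0.5, 2, -1.0]]
    human = [0, 0, 1, 0.1, 2, 0.1]
    print(gmm_nll(human, means, [0.5] * 3, [1 / 3] * 3))
    # Hypothetical maneuver histograms: human re-drives vs. planner samples.
    print(jsd([5, 3, 2], [4, 4, 2]))
```

Lower NLL means the human trajectory is better explained by the predicted mixture, and lower JSD means the predicted maneuver distribution is closer to the human one. The log-sum-exp step avoids underflow when all component densities are tiny, which is common for long, high-dimensional trajectories.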